I’m trying to load a comlpex and deeply nested xml data into foundry. I have tried to use pipeline builder and given an example of the xml but the sample of the xml doesn’t contain the entire xml schema. I do have a XSD file that I can valid the schema but I don’t see where I can load the XSD schema into pipeline builder to have pipeline builder generate the schema use for parsing. I tried to use code repo but I’m not having luck as the schema is complex and deeply nested. Where can I get documentation or help on this subject?

You can use spark-xml (documentation at https://docs.databricks.com/en/archive/connectors/spark-xml-library.html) in Code Repositories, which includes support for automatic schema inference in addition to (currently experimental) support for generating a schema from an XSD file via the com.databricks.spark.xml.util.XSDToSchema function. It doesn’t look like there’s a great way for calling this function from Python, but Stack Overflow has a helpful example of how you could call it from a local spark-shell (you could take the output of that and paste it into the repository, etc.).

Specifying an explicit schema is more robust (and performant) than leveraging automatic schema inference, especially if you will be processing the data incrementally and different batches of incremental files will have different subsets of the full XSD schema. However, in a simple snapshot pipeline on relatively small data, automatic schema inference may be the most straightforward way to achieve your goals.

Here is a minimal example that uses automatic schema inference (the input is a dataset containing the books.xml file as linked from the spark-xml documentation). See that documentation for how to adapt this to use an explicit schema, if desired.

from transforms.api import transform, Input, Output

from transforms.verbs.dataframes import sanitize_schema_for_parquet

@transform(

output=Output("/path/to/output"),

raw=Input("/path/to/input"),

)

def compute(ctx, output, raw):

filesystem = raw.filesystem()

hadoop_path = filesystem.hadoop_path

files = [f"{hadoop_path}/{f.path}" for f in filesystem.ls()]

df = (

ctx

.spark_session

.read

.format("xml")

.option("rowTag", "book")

.load(files)

)

output.write_dataframe(sanitize_schema_for_parquet(df))

The only other tricky bit is how to add the spark-xml dependency. You can do so by adding the following piece of code at the bottom of the hidden transforms-python/build.gradle file (you can modify the version component of the dependency specification as necessary, but the below worked as-if for me in a fresh transforms-python repository in my environment).

dependencies {

condaJars 'com.databricks:spark-xml_2.13:0.18.0'

}

Thanks for your reply. But I’m having issues with the xml formatting when the closing tag and opening tag are on the same line.

Example

but if the file had it like

it would be ok. I’m looking for an option to allow the closing tag and opening tag to be on the same line. Is it possible ?

my code

xml_df = spark.read.format(“com.databricks.spark.xml”)

.option(“rowTag”, “Vendor”)

.load(xml_input.filesystem().hadoop_path)

spark-xml is an open-source library not provided by Palantir, so I don’t think that this forum is the appropriate place to go into that level of detail on how to use it. If you’re having trouble getting the library to do what you want, you should file an issue or pull request on the GitHub repository (https://github.com/databricks/spark-xml).

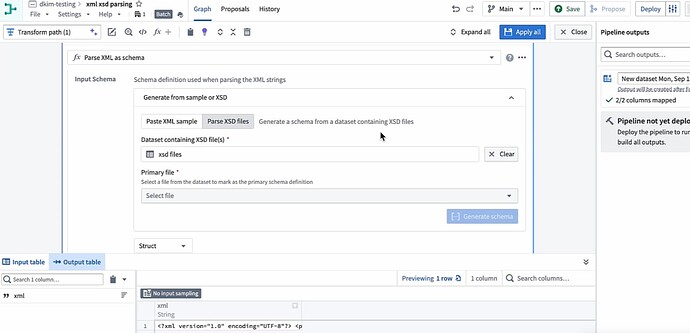

Hey @rmahadeen ! Pipeline Builder now supports parsing XML with XSD files:

You can now parse your XMLs in Pipeline Builder using XSD files. Simply import the XSD file into Foundry and select it for your input schema.